LIU Bin moved back and forth between industry and academia before becoming a PI at ZJ Lab.

He said these moves followed an algorithmic principle: use past experience to identify a local optimum, gather more observations farther out, and then try to find a better local optimum or the global optimum.

LIU has dedicated himself to theory and algorithms for years. Algorithms have shaped his way of thinking and his way of life, and have led him to solve practical problems in many fields.

Go after your interest

Before he joined ZJ Lab, LIU's research career was already somewhat cross-disciplinary.

During his doctoral studies, he worked on object tracking algorithms with researcher HOU Chaohuan, an academician of the Chinese Academy of Sciences. In 2009, he went to Duke University in the USA for postdoctoral research, where he worked with Prof. James Berger, a member of the National Academy of Sciences, on Bayesian statistics, particularly Bayesian algorithms. Together with statisticians at Duke University and astronomers at Cornell University, he developed an advanced analytic algorithm to search for terrestrial planets outside the solar system.

After returning to China, he worked at an innovative R&D institute and then at a leading communications company, where he applied his knowledge to practical problems. Two years later, he returned to campus to do basic research on analytic modeling, machine learning and optimization methods. After another seven years, he decided to join a leading Internet company to look for AI algorithmic solutions to practical industrial challenges.

"Whether in industry or in academia, each part of my experience has inspired me and triggered some thinking. And all the parts can be connected into a solid line." According to LIU Bin, a simple main thread runs through his rich experience: listen to your heart and follow your interests to broaden your research perspective and deepen your understanding.

LIU's interest in Bayesian statistics stems from his doctoral studies. "I applied an advanced algorithm in my doctoral research, and it came from Bayesian statistics." During his postdoctoral research in the USA, he had the chance to talk face to face with the world's top Bayesian statisticians, which solidified his interest in and understanding of the field.

Thomas Bayes, an 18th-century English mathematician, is known to the world for his work on probability and statistics. Bayes' theorem has had a great influence on the development of modern probability theory and mathematical statistics.

"Bayes' theorem is very concise. Like physical laws that are simple in form, it portrays the essential laws of the cosmos," said LIU Bin.

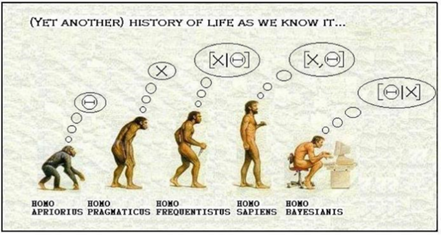

Human evolution can be described in the terms of a statistical model. The five stages are: modeling (finding appropriate parameters to build a model), observing and gathering data, describing how the data are generated under the model, establishing the joint probability distribution of the model parameters and the data, and analyzing the data to reason backward and discover patterns.
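As a rough illustration of these five stages, the following Python sketch works through a toy coin-flipping problem. The coin, the candidate values of `theta` and the observed flips are all made up for illustration and do not come from LIU's work.

```python
# Stage 1: modeling. Hypothetical model: a coin whose unknown parameter
# theta is its probability of landing heads. We consider three candidate
# values and start with no preference among them (a uniform prior).
thetas = [0.2, 0.5, 0.8]
prior = {theta: 1.0 / len(thetas) for theta in thetas}

# Stage 2: observation and data gathering (made-up flips: 1 = heads, 0 = tails).
data = [1, 1, 0, 1, 1, 1]

# Stage 3: describe how the model generates the data (the likelihood).
def likelihood(theta, flips):
    """Probability of seeing exactly these flips if heads has probability theta."""
    p = 1.0
    for x in flips:
        p *= theta if x == 1 else 1.0 - theta
    return p

# Stage 4: joint probability of each parameter value together with the data.
joint = {theta: prior[theta] * likelihood(theta, data) for theta in thetas}

# Stage 5: backward reasoning. Dividing the joint by the total probability
# of the data gives the belief in each parameter value after seeing the data.
evidence = sum(joint.values())
posterior = {theta: joint[theta] / evidence for theta in thetas}

for theta in thetas:
    print(f"theta={theta}: prior={prior[theta]:.3f}, posterior={posterior[theta]:.3f}")
```

With five heads in six flips, most of the belief moves to the candidate that favors heads, even though all three candidates started out equally plausible.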

Bayes' theorem describes a law of cognition: the object being modeled is your "belief" in something, and that belief changes as data accumulate.

"It's like a baby who seems ignorant in the beginning. As it observes, gathers and processes data, it becomes 'learned'. Each time it absorbs something new, its perception updates." LIU Bin explained that this kind of dynamic updating of perception can be described with Bayes' theorem. Bayesian methodology is widely applied in AI, biology, engineering, perception and neuroscience.

The Bayesian power

Concise yet powerful, Bayes' theorem captured LIU Bin's mind and has steered his research for the past decade.

Based on Bayesian dynamic multi-model integration theory, LIU designed a new algorithm for intelligent recommendation. It was applied to a product line of the Internet company where he worked and raised its core business indicators by 10%.

With algorithmic models, apps can compute and recommend different products to different users. However, user tastes may change over time, reducing the model's precision. Bayesian dynamic multi-model integration theory allows many models to work together. When the data indicate that user behavior is closer to a certain model's hypothesis, the system automatically gives that model more weight, so the algorithm generates recommendations closer to the user's real-time preferences and needs.

LIU Bin said a model is like an expert who makes estimates or assists us in decision making. If we rely on a single expert, we have to accept the output and cannot evaluate whether the conclusion is uncertain or robust. If we have multiple experts, each specializing in one or more areas and giving decisions at different points in time, we can use Bayes' rule to weigh their decisions dynamically and in real time, making the final conclusion more accurate and reliable.
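The idea of weighing several experts and shifting weight as the data change can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not LIU's actual algorithm: the two Gaussian "experts", the `forgetting` factor and the observations are hypothetical, and the forgetting step is borrowed from the general dynamic model averaging approach.

```python
import math

def gaussian_pdf(y, mean, std):
    """Density of a normal distribution, used as each expert's likelihood."""
    z = (y - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def update_weights(weights, likelihoods, forgetting=0.9):
    """One step of Bayesian weight updating with forgetting (assumed scheme)."""
    # Forgetting step: raise weights to a power below 1 and renormalize, which
    # pulls them toward equal so an out-of-favor expert can recover quickly.
    flattened = [w ** forgetting for w in weights]
    total = sum(flattened)
    predicted = [w / total for w in flattened]
    # Bayes step: reweight each expert by how well it explained the new data.
    posterior = [w * l for w, l in zip(predicted, likelihoods)]
    total = sum(posterior)
    return [w / total for w in posterior]

# Two hypothetical experts: one always predicts 0.0, the other always 5.0.
expert_means = [0.0, 5.0]
expert_std = 1.0

# Made-up data whose behavior changes halfway through.
observations = [0.2, -0.1, 0.3, 0.0, 5.1, 4.8, 5.3, 4.9]

weights = [0.5, 0.5]
combined_predictions = []
weight_history = []
for y in observations:
    # Combined forecast made before seeing y: a weighted mix of the experts.
    combined_predictions.append(sum(w * m for w, m in zip(weights, expert_means)))
    likelihoods = [gaussian_pdf(y, m, expert_std) for m in expert_means]
    weights = update_weights(weights, likelihoods)
    weight_history.append(weights)

for y, w in zip(observations, weight_history):
    print(f"y={y:5.1f}  weights={[round(x, 3) for x in w]}")
```

In this toy run the first expert carries nearly all the weight while the data stay near 0, and the weight moves to the second expert within a few steps after the data jump to around 5. Without the forgetting step, the first expert's accumulated advantage would take longer to overturn.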

Bayesian dynamic multi-model integration theory provides a powerful framework for modeling and algorithm design, supporting robust learning and prediction in dynamic systems. Besides the Internet business scenario above, another typical application of the theory is the invasive brain-computer interface (BCI).

In a BCI system, the computer decodes the EEG signals transmitted by a Utah array electrode implanted in the human brain to estimate the user's intention, and then helps the user carry it out, for example by moving an artificial limb. Because of transient noise and changes in neural plasticity while the brain controls physical movement, the EEG is usually unstable, which reduces the long-term effectiveness of the decoding model.

When Bayesian dynamic multi-model integration is applied to this non-stationary neural decoding problem, Bayesian belief updating can be used to dynamically select and combine multiple models so that the decoder adapts rapidly to changes in the EEG. This is much more effective than previous decoding methods.

Discover the potential of deep learning

Whether in industry or in academia, LIU Bin's research has always been driven by his desire to understand the working mechanisms and scientific principles behind theoretical algorithms.

At the Internet company, he noticed that despite the wide application of deep learning, its operating principles remained unclear.

"Deep learning is aimed at massive data. A deep learning model may have a billion parameters. Model designers usually shape a framework and then step back. The connections between parameters and their weights are worked out by the algorithm and by strong computing power. From raw data input to end-to-end prediction output, the process of deep learning is like a 'black box'," said LIU Bin.

The bottleneck is that, because of this "black box" nature, current deep learning algorithms rely on high-quality labeled data and strong computing power, and are not robust, explainable or reliable enough.

"As a researcher, when I use a deep learning tool, I instinctively ask many questions. I want to make everything clear. For example, if a deep learning model says it may rain tomorrow, I want to know whether the probability is 99% or 60%. In safety-related decision making in particular, we need to know the reliability and uncertainty of the algorithm's conclusions."

LIU Bin hopes to offer new ideas and solutions for the weaknesses of current deep learning technology, for example by combining the strengths of Bayesian dynamic multi-model integration theory and deep learning so that deep learning frameworks can reason about uncertainty and give more reliable results.

This is why LIU Bin chose to leave industry and join ZJ Lab. Here, he can work on frontier basic theory and algorithms, think deeply and systematically, apply profound mathematical tools and precise mathematical language, explore the world in a scientific way, and tap the potential of deep learning and AI.

LIU Bin's team is attracting more and more scientists who aspire to do frontier basic research. Dr. WANG Lele, formerly an algorithm expert at an Internet company, is one of them.

"For a company, once an algorithm meets the precision requirement of a business, say 95%, it won't invest more resources to refine it, nor does it care how that number was achieved. Instead, resources are immediately diverted to another business. In fact, knowing why leads to new insights, and we may be able to improve from 95% to 96% or even higher. Here at ZJ Lab, I can devote myself to my research. I identify a target and I go for it," said WANG Lele.