From December 10 to 15, NeurIPS (Conference on Neural Information Processing Systems), a flagship international AI conference, was held in Vancouver, Canada. The conference covers a wide range of intelligent computing research fields, including machine learning, deep learning, and neuroscience. The paper "Towards an Information Theoretic Framework of Context-Based Offline Meta-Reinforcement Learning" was accepted as a Spotlight paper at NeurIPS 2024 (with an acceptance rate of 2.08%). The first author of the paper is LI Lanqing, a research expert at ZJ Lab and a part-time Ph.D. student at the Chinese University of Hong Kong (CUHK), supervised by Professor Pheng Ann Heng from the Department of Computer Science and Engineering at CUHK. ZHANG Hai, a master's student at Tongji University, is the co-first author, and Professor ZHAO Junqiao is the corresponding author.

Paper link: https://openreview.net/pdf?id=QFUsZvw9mx

This research presents a theoretical framework called UNICORN (Unified Information Theoretic Framework of Context-Based Offline Meta-Reinforcement Learning), which focuses on task representation learning in reinforcement learning. UNICORN adopts a single optimization objective, the mutual information defined on the task representation, and unifies the key mainstream methods in the field under it, achieving breakthroughs in offline meta-reinforcement learning.

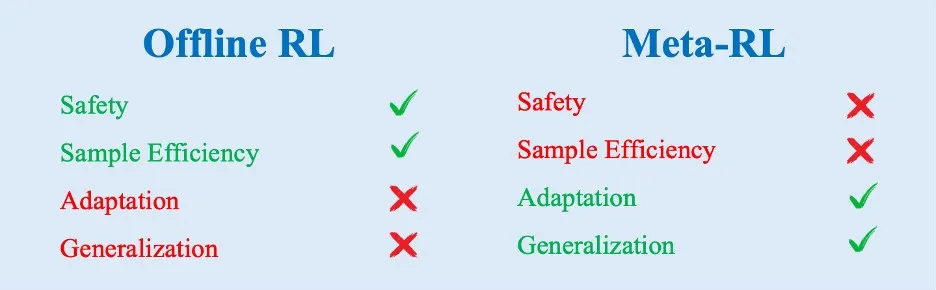

In traditional reinforcement learning, an agent collects feedback through real-time interaction with the external environment, accumulating experience through trial and error. However, in many real-world scenarios, such as autonomous driving and disease treatment, real-time data collection can be costly or infeasible due to safety concerns. As a result, researchers have begun exploring "offline reinforcement learning", a method that learns solely from historical data. On the other hand, the complex and dynamic nature of real-world scenarios demands that agents possess the ability to handle multiple tasks, a capability known as "meta-reinforcement learning" (meta-RL).

To leverage the advantages of both approaches, researchers began exploring ways to combine these two paradigms to train more powerful agents. One mainstream approach in this area is "Context-Based Offline Meta-Reinforcement Learning" (COMRL), which enables intelligent systems to learn from offline experience collected across past environments, thereby enhancing their ability to generalize to new environments. A prominent example of combining the two paradigms is the FOCAL algorithm, proposed by LI Lanqing in 2021.

In the COMRL framework, learning robust and effective task representations is the core problem, and the biggest challenge is context shift: a shift in data distribution caused by differences in the tasks themselves or in the sampling strategies. Existing mainstream methods often rely on techniques such as metric learning and contrastive learning to improve the algorithms empirically. However, they lack systematic theoretical support and algorithmic design guidance, particularly for task representation learning and for handling context shift.
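To make the idea of contrastive learning for task representations concrete, the following is a minimal sketch of an InfoNCE-style contrastive loss on toy task embeddings. It is an illustration only, not the implementation used in the paper or in any existing COMRL method; the function names (`info_nce_loss`, `normalize`, `dot`), the temperature value, and the two-dimensional embeddings are all assumptions made for this example.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def normalize(v):
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def info_nce_loss(anchors, positives, temperature=0.1):
    """InfoNCE-style loss (hypothetical helper for illustration).

    positives[i] is the positive sample for anchors[i]; every other
    entry of `positives` serves as a negative for that anchor.
    """
    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i, ai in enumerate(a):
        # Cosine similarity between anchor i and every candidate.
        logits = [dot(ai, pj) / temperature for pj in p]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the true positive.
        total += log_denom - logits[i]
    return total / len(a)


# Toy embeddings standing in for context encodings of two tasks.
task_a = [1.0, 0.0]
task_b = [0.0, 1.0]

# Embeddings paired with contexts from the same task: low loss.
aligned = info_nce_loss([task_a, task_b], [task_a, task_b])
# Embeddings paired with contexts from the other task: high loss.
mismatched = info_nce_loss([task_a, task_b], [task_b, task_a])
```

In this toy setting the loss is small when each embedding is paired with a context from its own task and large when the pairing is swapped, which is the pressure a contrastive objective puts on a context encoder.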

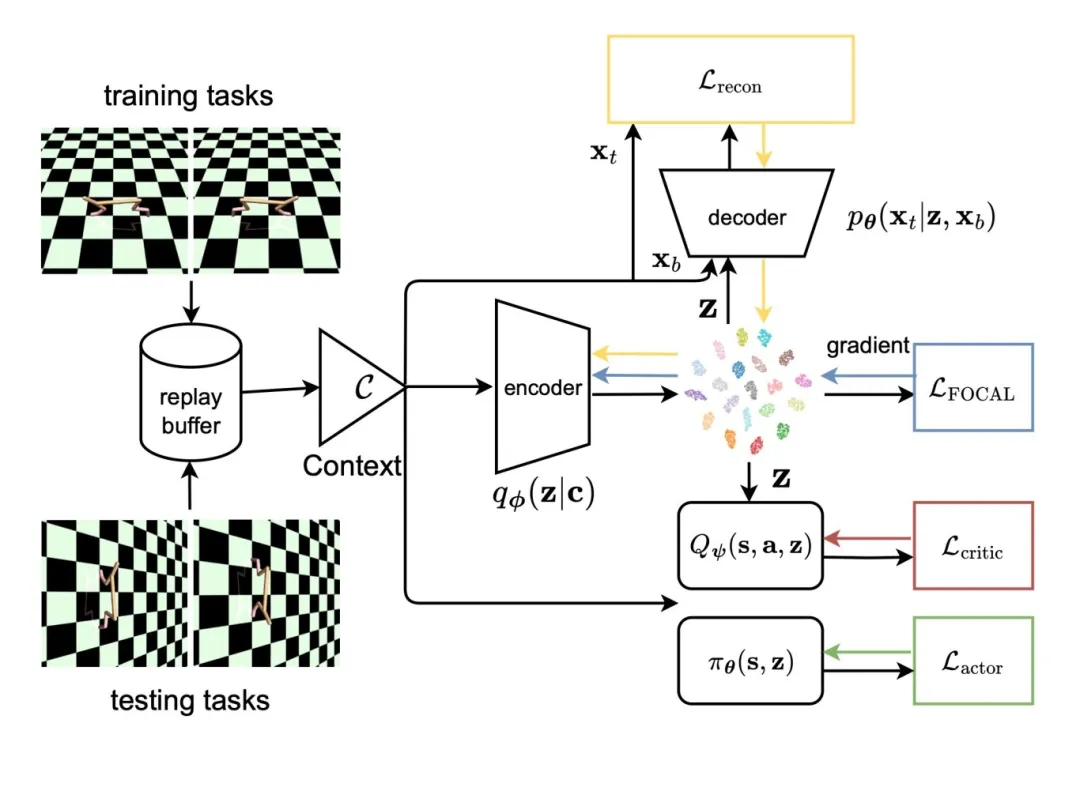

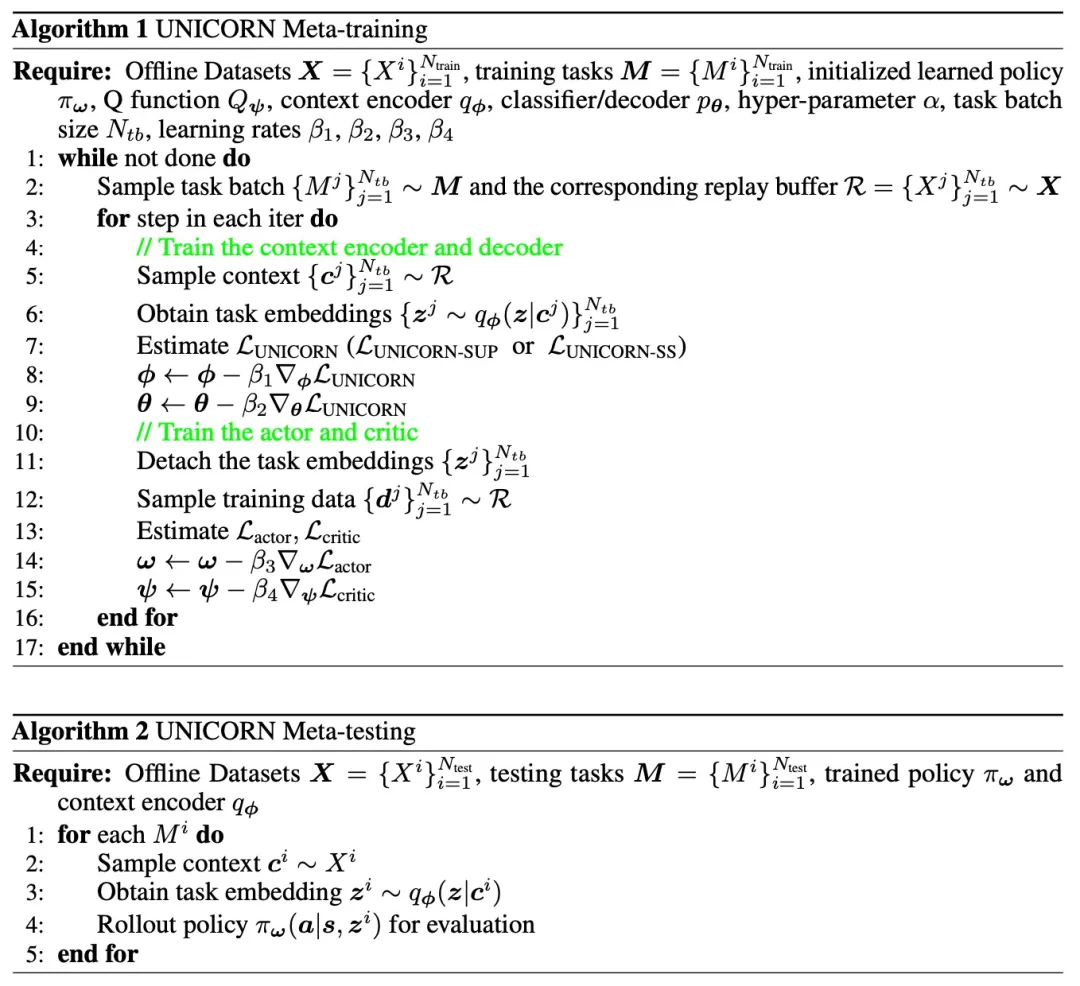

To address this issue, the UNICORN framework proposed in this research draws on information theory to systematically define and decompose the task representation learning problem in COMRL from three perspectives: a mathematical definition, a decomposition of causal relationships, and a central theorem. Through rigorous theoretical proof, this research unifies the optimization objectives of existing methods and introduces two new implementations of the UNICORN algorithm: a supervised version and a self-supervised version.
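For reference, the mutual information between a task variable and its learned representation has the standard form below. The symbols $M$ (task) and $Z$ (task representation) are notation assumed here for illustration; the paper itself should be consulted for the exact objective and the bounds it derives.

$$
I(Z; M) = \mathbb{E}_{p(z, m)}\left[\log \frac{p(z, m)}{p(z)\,p(m)}\right] = H(Z) - H(Z \mid M)
$$

Intuitively, this quantity is large when knowing the task removes most of the uncertainty about the representation, that is, when $Z$ carries a lot of information about which task generated the data.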

Figure Caption: Algorithm Flow of UNICORN

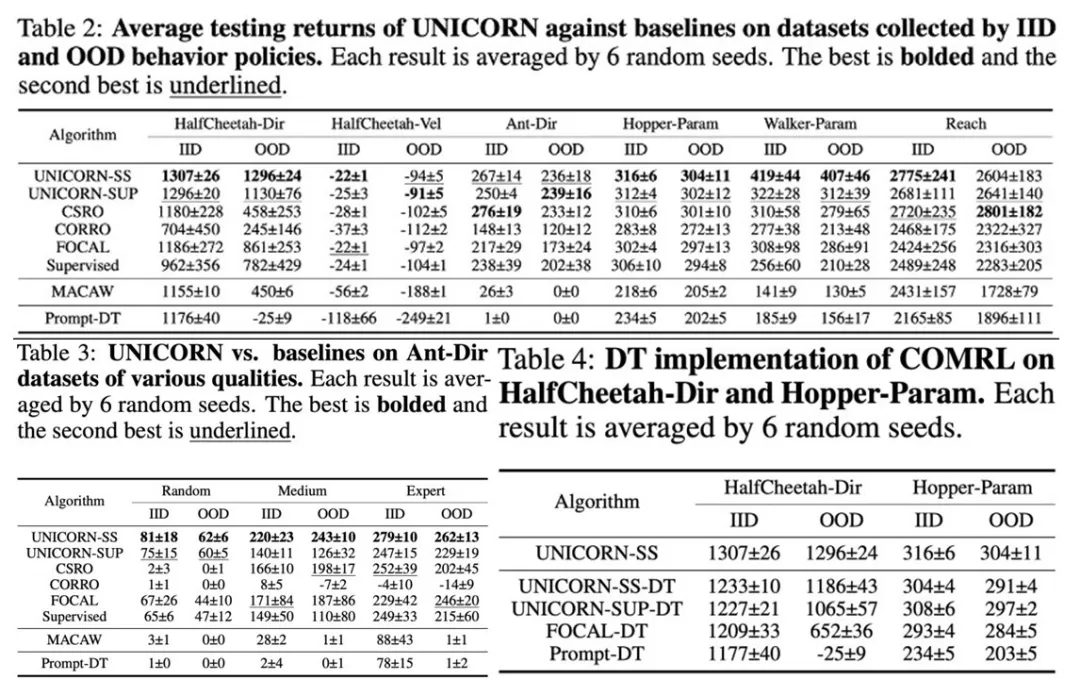

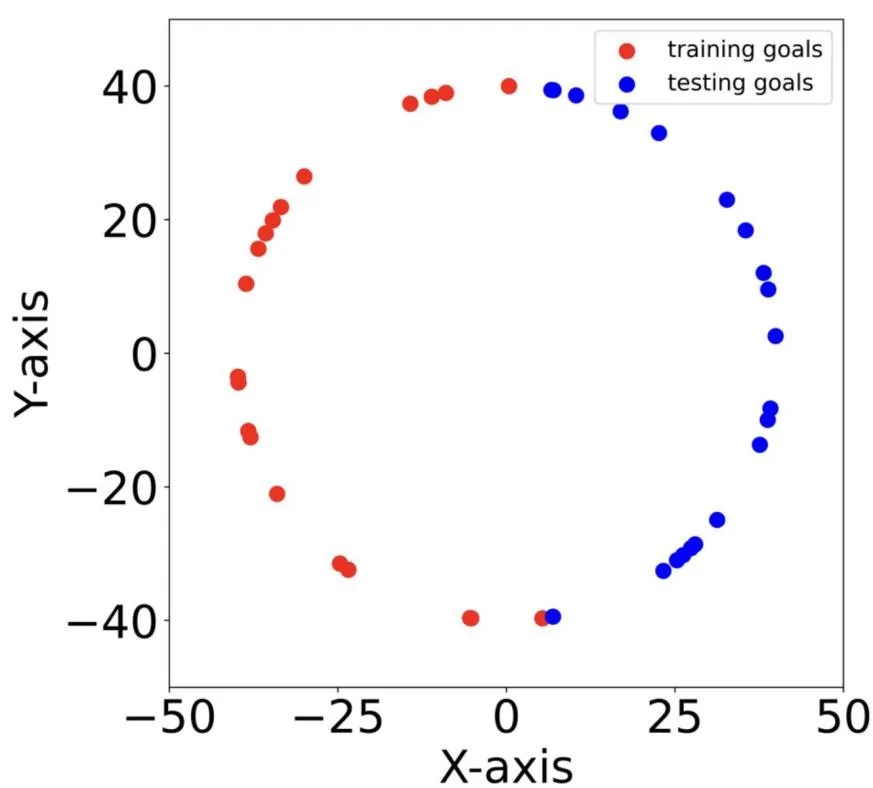

The generality of this theoretical framework has been extensively validated through experiments on a variety of continuous control tasks. Across multiple settings, including in-distribution and out-of-distribution test sets, datasets of varying quality, different model architectures, and generalization to out-of-distribution tasks, UNICORN consistently matches or outperforms existing methods.

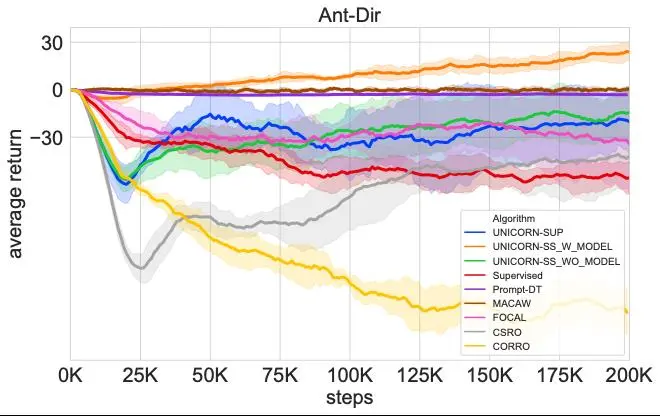

Figure Caption: The two UNICORN algorithms (UNICORN-SS, UNICORN-SUP) outperform existing methods in extensive experimental testing

The experimental results show that UNICORN provides a unified theoretical foundation and algorithmic design guidelines for offline meta-reinforcement learning. It offers significant insights for large-scale offline pre-training and fine-tuning of decision-making models across multiple tasks. It also helps address challenges that AI models face in cutting-edge fields such as drug design, precision medicine, and embodied intelligence, including poor generalization, difficulty with multi-objective optimization, and low sample efficiency.

In the life sciences, this technology is expected to offer more efficient and robust pre-training and fine-tuning methods for biomolecular foundation models. It holds significant application potential in downstream tasks such as sequence design for protein and nucleic acid drugs and synthesis process design for small-molecule drugs. It will also provide core technical support for the open computing platform for life sciences at ZJ Lab.