
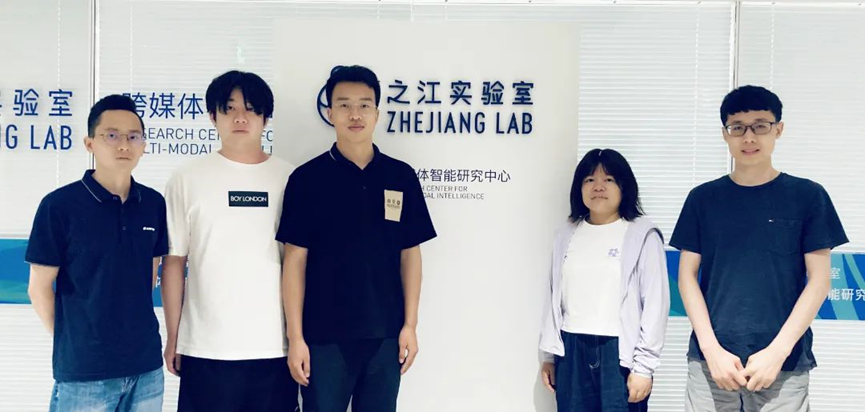

The 4th China AI Competition has recently concluded. A team from Zhejiang Lab's Research Center for Multi-Modal Intelligence excelled in the competition, earning an A-Level Certificate for its performance in multimodal sentiment analysis.

The competition was jointly organized by the Cyberspace Administration of China, the Ministry of Industry and Information Technology, the Ministry of Public Security, the National Radio and Television Administration, and the Xiamen Municipal People's Government. It comprised eight sessions centered on algorithm governance and multimedia content recognition. A total of 185 teams from domestic Internet companies, AI companies, universities, research institutes, and other organizations registered and submitted 323 entries. In the end, 12 teams received the A-Level Certificate.

Unlike a typical artificial intelligence competition, the multimodal sentiment analysis session provided no training data. Participating teams therefore needed not only strong algorithmic capabilities but also the ability to collect and annotate large volumes of data quickly and efficiently. The team from the Research Center for Multi-Modal Intelligence introduced a modality-independent representation and training approach that reduces dependence between modalities and better captures their complementarity, substantially improving the performance of its emotion recognition system. According to the results released for the multimodal sentiment analysis session, the team ranked first in recognition accuracy.

"On the one hand, we were able to win the top honor thanks to our center's research in multimodal affective computing across vision, text and voice, which allowed us to develop a solution quickly after receiving the competition task. On the other hand, our extensive work on dataset construction has led us to create the world's largest Chinese dataset for multimodal emotion recognition. Based on this dataset, we authored a paper presented at ACL 2023, a premier conference in Natural Language Processing (NLP). All these efforts provide solid technical support for improving our algorithms," a team member said.

In addition to its work on multimodal affective computing and data, the Research Center for Multi-Modal Intelligence has publicly released the Affective Computing White Paper (bilingual edition) and a multimodal human-like empathic interaction system. Designed to meet major national needs, the system addresses technical problems in existing human-machine interaction systems, such as insufficient multimodal sensing, weak empathic interaction, and significant latency in data sensing, memory and computing. Breakthroughs in key technologies for natural interaction and affective expression have also created a virtuous cycle between affective interaction and the fulfillment of user needs, giving rise to AI-enabled voice assistants, customer service robots and smart healthcare technologies, and delivering remarkable economic and social benefits.